RENOR & Partners is a business consulting firm with high quality standards and the lowest prices on the market.

RENOR & Partners S.r.l.

Via Cicerone, 15 - Ariccia (RM)

info@renor.it

AI, CV, and Spectroscopy for Waste Management – Part 1


Do you also find waste sorting boring?

I bet it’s happened to you too: after dinner with friends or a family lunch, it’s time to clean up, and there’s the waste sorting, waiting to eat up your time. Paper in one bin, plastic in another, glass and metal in a different container, and organic waste in the smallest one… It quickly becomes tedious, especially when there is a lot of waste to sort.

Hence the question: is there a way to make all of this simpler, faster, even automatic? Can today’s technology give us real, practical help with this everyday chore and save us both time and effort?

How a Technological Project Is Born: From Idea to Final Product

With the heavy workload I’m managing at the moment, it will be hard to find much time for this project. Still, I’ve decided to use the few free moments I have in the evening after dinner to move it forward and share it with you step by step.

Beyond the intrinsic usefulness of this project, what interests me most is showing you how to get from an initial idea to a finished product. I want to take you through the stages of a project that may look simple in theory but is in fact extremely complex, because it requires a broad range of skills that go far beyond writing code.

To complete this work, in fact, one needs in-depth knowledge of several fields: from 3D modeling to 3D printing, from prototyping to electronics, all the way to physics, mathematics, and, above all, artificial intelligence.

In this article and the ones that follow, I will guide you through this entire journey, sharing the challenges, obstacles, and solutions I encounter along the way. It will be an interesting way to discover together how an innovative idea can become something truly functional, and the result will be shared as an open-source project on my GitHub profile.

Let’s assess the initial critical issues.

We all rely on our senses to explore and interpret the world around us. When we hold an object in our hands, we can observe it, touch it, smell it, or even tap it lightly to hear the sound it makes. Sometimes, we might even taste it to perceive its flavor. We can think of our senses as gateways that allow us to connect and interact with the world.

Imagine someone hands you a bottle: just by looking at it or, at most, holding it, you can immediately tell whether it’s made of glass or plastic. If instead someone handed you a can of peeled tomatoes, you’d notice the distinctive metallic color and conclude that it’s made of metal. And if you weren’t sure, you might squeeze it to see whether it stays deformed, because you know that metal, once dented, keeps its new shape, while plastic, within certain limits, springs back to its original form.

All of this is possible thanks to the cognitive experience we have developed throughout our lives.

But what about a machine? How can we transfer this ability to it? How can it learn to identify the material an object is made of?

Let’s emulate the senses.

There are several approaches…

One of the most fascinating approaches is based on Computer Vision, a technology that emulates the human sense of sight.

This approach uses specialized artificial neural networks trained to recognize various objects, materials, and shapes. It’s the same principle that allows modern self-driving cars (without mentioning any well-known brands) to navigate our roads safely.

These vehicles are equipped with cameras whose images are analyzed continuously, many times per second: roads, traffic signs, pedestrians, other vehicles, and potential obstacles. The neural network, carefully trained over months or even years, lets the vehicle identify each object and respond appropriately: staying within its lane, strictly obeying traffic signs, stop signals, and right-of-way rules, avoiding collisions, and calculating the safest escape route in case of danger.

The first obstacle: not all neural networks are easy to train

If you’ve followed the discussion this far, you’ve surely noticed an important detail: we just mentioned neural networks trained for months, if not years. And this is where the first real challenge of our project arises.

The issue, in fact, is far from simple. We are not teaching a machine to recognize a pedestrian, something with a head, two arms, and two legs. Here, we need to train a neural network to understand exactly what material a piece of waste is made of. And the difficulty grows when we consider that the same product, milk for example, can be packaged in a plastic bottle by one brand, in a glass bottle by another, and in a Tetra Pak carton by yet another.

If we wanted to train a neural network to accurately recognize the packaging material of every food product on the market, we would have to collect and catalog tens or even hundreds of thousands of different samples. Each brand and each product variant would have to be captured many times, generating enormous amounts of training data.

This path is clearly neither practical nor sustainable. We must therefore look for a smarter, more flexible, and scalable solution, one that lets the neural network “generalize” everything that can be generalized, recognizing materials and objects by their general characteristics rather than by features specific to each individual product.

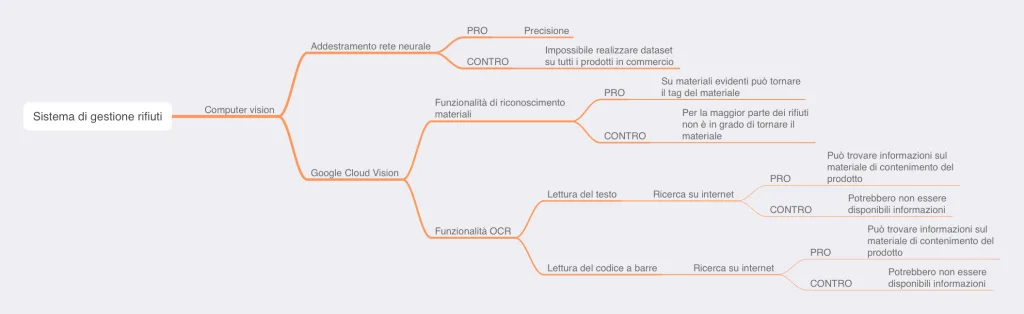

Some supporting ideas

Although it is difficult to train a neural network for certain types of waste, we can still submit an image of the item to a pre-trained neural network. Google Cloud Vision, for example, analyzes images and, when the material is visually very recognizable, can assign generic labels such as:

- plastic

- glass

- metal

- cardboard

- paper

The problem is that it’s not 100% reliable: it doesn’t distinguish specific variants (e.g., Tetra Pak vs. plain cardboard), and it can’t infer the material from texture or sound, as a human would by handling the product.
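
A minimal sketch of this step, assuming the official `google-cloud-vision` Python client and configured credentials: `label_detection` returns label annotations, each with a `description` and a confidence `score`. The keyword table and the 0.7 threshold are illustrative assumptions, not tuned values. Because the labels are not fully reliable, the function returns `None` whenever no material label is confident enough, so that a later stage can take over.

```python
from typing import Iterable, List, Optional, Tuple

# Illustrative mapping from generic Cloud Vision label text to our waste classes.
# The keywords are assumptions for this sketch, not an official taxonomy.
MATERIAL_KEYWORDS = {
    "plastic": "plastic",
    "glass": "glass",
    "metal": "metal",
    "tin can": "metal",
    "cardboard": "cardboard",
    "paper": "paper",
}


def material_from_labels(labels: Iterable[Tuple[str, float]],
                         min_score: float = 0.7) -> Optional[str]:
    """Return the material of the most confident matching label, or None.

    `labels` are (description, score) pairs; labels scoring below `min_score`
    are ignored, so an uncertain result is handed to the next stage.
    """
    best_material: Optional[str] = None
    best_score = min_score
    for description, score in labels:
        text = description.lower()
        for keyword, material in MATERIAL_KEYWORDS.items():
            if keyword in text and score >= best_score:
                best_material, best_score = material, score
    return best_material


def detect_labels(image_path: str) -> List[Tuple[str, float]]:
    """Run Cloud Vision label detection on a local image file.

    Requires the google-cloud-vision package and configured credentials.
    """
    from google.cloud import vision  # lazy import: the mapping above works without it

    client = vision.ImageAnnotatorClient()
    with open(image_path, "rb") as f:
        image = vision.Image(content=f.read())
    response = client.label_detection(image=image)
    if response.error.message:
        raise RuntimeError(response.error.message)
    return [(label.description, label.score) for label in response.label_annotations]
```

Called as `material_from_labels(detect_labels("bottle.jpg"))`, it would answer `"plastic"` only if a label such as "Plastic bottle" came back with a score of at least 0.7; otherwise it returns `None`.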

However, there is a realistic alternative. A more reliable approach could be to use Cloud Vision OCR to read the text on the product label and then search online for information about the material (e.g., PET bottle, Tetra Pak packaging).
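
The OCR route could start as sketched below, again assuming the `google-cloud-vision` client: `text_detection` returns text annotations, the first of which holds the full detected text. The online search is not implemented here. As a hypothetical first pass, the sketch only scans the OCR text for a few well-known markers (plastic resin abbreviations such as PET and HDPE, the ALU and PAP abbreviations found on many European packages, the Tetra Pak name). The marker table and its order are assumptions and far from complete.

```python
import re
from typing import Optional

# Hypothetical marker table, checked in order: compiled pattern -> waste class.
# Abbreviations are matched case-sensitively because they are printed in
# capitals, so ordinary words such as "pet" are not caught.
LABEL_MARKERS = [
    (re.compile(r"\btetra\s*pak\b", re.IGNORECASE), "tetra pak"),
    (re.compile(r"\b(?:PETE?|HDPE|LDPE|PVC|PP|PS)\b"), "plastic"),
    (re.compile(r"\bALU\b"), "metal"),
    (re.compile(r"\balumin(?:ium|um)\b", re.IGNORECASE), "metal"),
    (re.compile(r"\bPAP\b"), "paper/cardboard"),
    (re.compile(r"\bglass\b", re.IGNORECASE), "glass"),
]


def material_from_text(text: str) -> Optional[str]:
    """Return the waste class of the first marker found in the OCR text, or None."""
    for pattern, material in LABEL_MARKERS:
        if pattern.search(text):
            return material
    return None


def read_label_text(image_path: str) -> str:
    """Run Cloud Vision OCR on a local image and return the full detected text."""
    from google.cloud import vision  # lazy import: only needed for the real API call

    client = vision.ImageAnnotatorClient()
    with open(image_path, "rb") as f:
        image = vision.Image(content=f.read())
    response = client.text_detection(image=image)
    if response.error.message:
        raise RuntimeError(response.error.message)
    annotations = response.text_annotations
    return annotations[0].description if annotations else ""
```

When `material_from_text` returns `None`, that would be the point to fall back to the online search described above.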

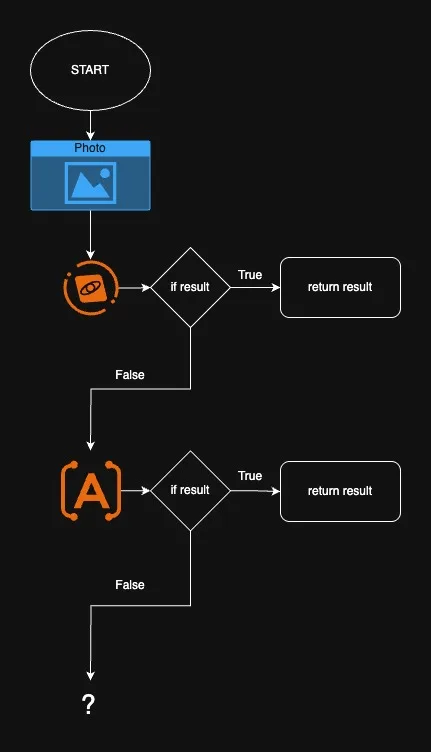

Let’s start outlining.

To avoid forgetting anything, let’s start by drafting a mind map and a functional diagram that we can adjust and update as the project moves forward.

What if this solution doesn’t yield a reliable result?

It’s possible that, despite all efforts, the system still doesn’t return a reliable and definitive result. So how do we handle that?

- We can place the product in a manual verification state (a solution I’m not particularly fond of).

- We can classify the waste as non-recyclable (although this solution isn’t particularly eco-friendly either).

- We can look for other ways to understand the material of the product.

Let’s focus on the third solution.

What other possibilities are there for identifying a material?

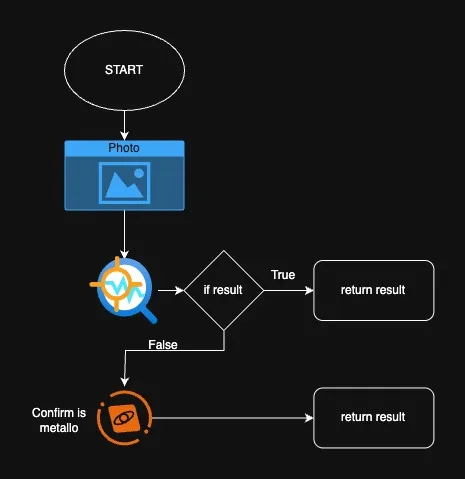

A safe, fast, real-time way to identify a material is spectroscopy, specifically Raman spectroscopy. It is one of the most reliable and precise techniques for identifying what an object is made of, and in particular its chemical composition, whether the sample is a solid, a liquid, or a polymer.

Raman spectroscopy is based on the interaction of monochromatic laser light with the molecular vibrations of a material. When the laser hits a sample, a tiny fraction of the light is scattered inelastically, that is, with a shift in energy (the Raman effect). The pattern of these shifts, the Raman spectrum, is characteristic of the material’s molecular structure and works as a kind of fingerprint.
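
The spectrum is normally plotted against the Raman shift: the difference, in wavenumbers (cm⁻¹), between the laser light and the scattered light, shift = 10⁷/λ_laser − 10⁷/λ_scattered with both wavelengths in nm. Since the shift reflects the molecular vibrations rather than the laser wavelength, it is the natural axis for comparing spectra. A small helper for the conversion, in both directions; the 532 nm laser used below is just a common example, not a design decision for this project.

```python
NM_TO_INV_CM = 1e7  # 1 nm^-1 equals 10^7 cm^-1


def raman_shift_cm1(laser_nm: float, scattered_nm: float) -> float:
    """Raman shift in cm^-1 from laser and scattered wavelengths in nm.

    Positive values correspond to Stokes scattering: the scattered light has a
    longer wavelength (lower energy) than the laser.
    """
    return NM_TO_INV_CM / laser_nm - NM_TO_INV_CM / scattered_nm


def scattered_wavelength_nm(laser_nm: float, shift_cm1: float) -> float:
    """Inverse conversion: wavelength in nm at which a Stokes shift appears."""
    return NM_TO_INV_CM / (NM_TO_INV_CM / laser_nm - shift_cm1)
```

With a 532 nm laser, for instance, `scattered_wavelength_nm(532.0, 1000.0)` places a band at 1000 cm⁻¹ at about 561.9 nm.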

What can a Raman spectroscope identify?

It can identify plastic, glass, paper, organic and inorganic compounds, and even Tetra Pak. However, it cannot identify metals: they reflect most of the incident light and produce essentially no Raman signal. This may not be a problem, though, because we can proceed by exclusion: if a material is not among those listed, it is almost certainly metal.
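
One way to apply the exclusion rule, sketched under hypothetical assumptions: the spectrum has already been baseline-corrected and resampled onto the same Raman-shift grid as a small library of reference spectra; a spectrum whose maximum stays below `min_signal` is read as "no Raman response", hence metal; otherwise the closest reference by cosine similarity wins if it clears `min_similarity`. As a cautious variant, a spectrum with signal but no match is labeled `"unknown"` and left to the fallback options discussed earlier. Thresholds and references would have to come from real measurements.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equally sampled spectra (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def classify_by_exclusion(spectrum: Sequence[float],
                          references: Dict[str, Sequence[float]],
                          min_signal: float = 0.05,
                          min_similarity: float = 0.9) -> str:
    """Label a baseline-corrected spectrum; no Raman signal means metal."""
    if max(spectrum, default=0.0) < min_signal:
        return "metal"  # no usable Raman response: metal by exclusion
    best_label, best_score = "unknown", min_similarity
    for label, reference in references.items():
        if len(reference) != len(spectrum):
            raise ValueError(f"reference {label!r} is sampled on a different grid")
        score = cosine_similarity(spectrum, reference)
        if score >= best_score:
            best_label, best_score = label, score
    return best_label
```

Cosine similarity ignores overall intensity, which varies with laser power and focus, and compares only the shape of the spectrum.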

There are, however, some challenges here as well… Depending on its quality, a Raman spectroscope can cost anywhere from a few thousand to tens of thousands of euros. But there’s a solution to this too: building our own Raman spectroscope, which we’ll explore later in a dedicated series of articles.

Let’s update our diagrams accordingly…

With this, the application logic of the system seems to be handled correctly, and we have a broad idea of how to proceed.
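
The application logic described so far could be sketched as a cascade: each stage either returns a material or passes by returning `None`, and if every stage passes, one of the fallbacks discussed above applies. The stage functions are hypothetical placeholders for the Cloud Vision labels, the OCR lookup, and the Raman measurement, and using manual verification as the default fallback is only an illustration.

```python
from typing import Callable, Optional, Sequence, Tuple

# A stage takes an item identifier (e.g. an image path) and returns a material or None.
Stage = Callable[[str], Optional[str]]

MANUAL_CHECK = "manual verification"
NON_RECYCLABLE = "non-recyclable"


def identify_material(item: str,
                      stages: Sequence[Tuple[str, Stage]],
                      fallback: str = MANUAL_CHECK) -> Tuple[str, str]:
    """Run the stages in order.

    Returns (material, stage_name) from the first stage that gives an answer,
    or (fallback, "fallback") if none of them does.
    """
    for name, stage in stages:
        material = stage(item)
        if material is not None:
            return material, name
    return fallback, "fallback"
```

Ordering the stages from cheapest to most expensive means the Raman measurement is only needed for items the camera-based stages could not resolve, and returning the stage name makes it easy to log which part of the system made each decision.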

What remains is the whole part on transporting and sorting the waste, which we will address in the next article.
